An Interactive Real-Time System for Pose Classification in Children’s Yoga and Kavayat Exercises

Keywords:

Logistic Regression, Machine Learning, Computer Vision, Human Action Recognition, Drill Exercises, Environmentally Integrated Technology

Abstract

Objective: Vision-based identification and classification of human movement using machine learning is central to applications including healthcare, surveillance, and sports analysis.

Methods: By analyzing movement data, the system distinguishes between different actions with high precision and supports monitoring and analysis in real time.
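The abstract does not say which features are extracted from the movement data. As a minimal sketch, assuming the pose estimator returns 2D keypoints as (x, y) pairs keyed by joint name, joint angles are one common, scale-invariant choice. The joint names and the `ANGLE_TRIPLETS` mapping below are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Return the interior angle at vertex b, in degrees (0 to 180)."""
    ang_ba = math.atan2(a[1] - b[1], a[0] - b[0])
    ang_bc = math.atan2(c[1] - b[1], c[0] - b[0])
    deg = abs(math.degrees(ang_ba - ang_bc))
    # Fold reflex angles back into the 0-180 range.
    return 360.0 - deg if deg > 180.0 else deg


# Hypothetical (start, vertex, end) joint triplets; the source does not list its features.
ANGLE_TRIPLETS: Dict[str, Tuple[str, str, str]] = {
    "left_elbow": ("left_shoulder", "left_elbow", "left_wrist"),
    "right_elbow": ("right_shoulder", "right_elbow", "right_wrist"),
    "left_knee": ("left_hip", "left_knee", "left_ankle"),
    "right_knee": ("right_hip", "right_knee", "right_ankle"),
}


def pose_features(keypoints: Dict[str, Point]) -> List[float]:
    """Map one frame of named keypoints to joint angles scaled to [0, 1]."""
    return [
        joint_angle(*(keypoints[name] for name in triplet)) / 180.0
        for triplet in ANGLE_TRIPLETS.values()
    ]
```

A straight limb yields 1.0 and a right angle yields 0.5, so every feature lies on the same scale regardless of how far the child stands from the camera.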

Results: This investigation presents a yoga and Kavayat (YK) pose classification system that uses machine learning, specifically Logistic Regression, to discern and categorize physical action patterns, give real-time feedback, and indicate how accurately a posture is performed. For children, yoga and Kavayat (sometimes called mock drill) improve both physical and mental health.
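The abstract names Logistic Regression as the classifier but does not give the solver, regularization, or multiclass strategy. The following is a minimal, dependency-free sketch of multinomial (softmax) logistic regression trained by batch gradient descent on per-frame feature vectors such as scaled joint angles. The class name, learning rate, and epoch count are hypothetical; a real system would more likely use a library implementation such as scikit-learn's `LogisticRegression`.

```python
import math
from typing import List, Sequence


def softmax(z: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max score before exponentiating."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


class SoftmaxLogisticRegression:
    """Hypothetical multinomial logistic regression with batch gradient descent."""

    def __init__(self, n_features: int, n_classes: int, lr: float = 0.1, epochs: int = 500):
        self.W = [[0.0] * n_features for _ in range(n_classes)]
        self.b = [0.0] * n_classes
        self.lr = lr
        self.epochs = epochs

    def _scores(self, x: Sequence[float]) -> List[float]:
        # Linear score per class: w_c . x + b_c
        return [sum(w * xi for w, xi in zip(row, x)) + bc for row, bc in zip(self.W, self.b)]

    def predict_proba(self, x: Sequence[float]) -> List[float]:
        return softmax(self._scores(x))

    def predict(self, x: Sequence[float]) -> int:
        p = self.predict_proba(x)
        return max(range(len(p)), key=p.__getitem__)

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[int]) -> "SoftmaxLogisticRegression":
        n, k, d = len(X), len(self.b), len(self.W[0])
        for _ in range(self.epochs):
            gW = [[0.0] * d for _ in range(k)]
            gb = [0.0] * k
            for x, label in zip(X, y):
                p = self.predict_proba(x)
                for c in range(k):
                    # Cross-entropy gradient w.r.t. the class-c score is p_c - 1[c == label].
                    err = p[c] - (1.0 if c == label else 0.0)
                    gb[c] += err
                    for j in range(d):
                        gW[c][j] += err * x[j]
            # Average the gradient over the batch and take one descent step.
            for c in range(k):
                self.b[c] -= self.lr * gb[c] / n
                for j in range(d):
                    self.W[c][j] -= self.lr * gW[c][j] / n
        return self
```

Logistic regression keeps inference to one dot product per class, which is consistent with the abstract's emphasis on a lightweight, real-time model.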

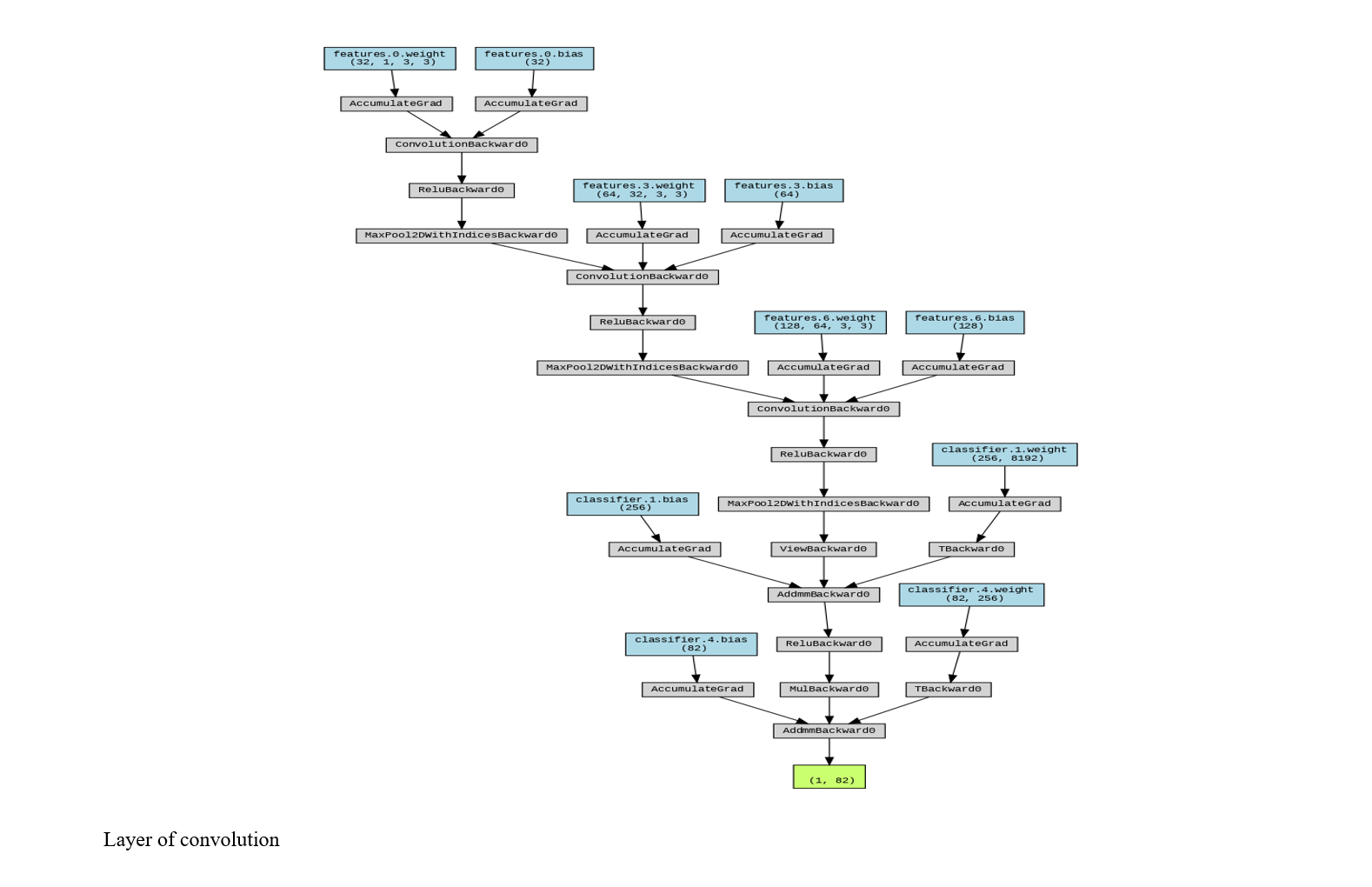

Conclusion: The lightweight model, called PoseHeatMap, achieves 98.00% accuracy, demonstrating that it can detect and classify patterns of physical action and give real-time feedback.
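The abstract does not describe how the 98.00% figure is computed or how feedback is shown to the child. As an illustration only, assuming accuracy means the fraction of correctly classified samples and feedback is driven by the classifier's per-frame probabilities, the two steps could look like this. `posture_feedback`, the 0.8 confidence threshold, and the pose labels are hypothetical.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of samples whose predicted class matches the true class."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def posture_feedback(probs: Sequence[float], target: int,
                     labels: Sequence[str], threshold: float = 0.8) -> str:
    """Turn one frame's class probabilities into a message about the target pose."""
    predicted = max(range(len(probs)), key=lambda i: probs[i])
    if predicted == target:
        if probs[target] >= threshold:
            return f"Correct: {labels[target]} ({probs[target]:.0%})"
        # Right pose, but the classifier is not confident: prompt fine adjustment.
        return f"Almost: {labels[target]} ({probs[target]:.0%}), refine alignment"
    return (f"Adjust posture: expected {labels[target]}, "
            f"detected {labels[predicted]} ({probs[predicted]:.0%})")
```

For example, with labels `["mountain_pose", "tree_pose"]` and probabilities `[0.9, 0.1]` for target 0, the message reports a correct mountain pose at 90%.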
